Accelerating AI Deployment with DevOps: Best Practices on AWS

Introduction

Combining DevOps practices with Machine Learning (ML) changes how AI systems are deployed. This article, based on a recent session at AWS Community Day Vadodara, explains why DevOps is crucial for ML success and where the two disciplines meet.

The Intersection of DevOps and Machine Learning

Why Bring DevOps into ML?

Faster Time-to-Market for ML Models: DevOps principles streamline the development pipeline, reduce bottlenecks, and speed the delivery of ML models to production.

Iterative Model Improvement with Continuous Feedback Loops: DevOps fosters a culture of continuous improvement, allowing ML models to evolve based on real-world feedback and changing requirements.

Consistent, Reliable Model Deployments: Through automation and standardized processes, DevOps ensures consistent and reliable deployment of ML models, mitigating risks associated with manual interventions.

The Confluence of DevOps & Machine Learning

Adaptability & Scalability: DevOps provides the flexibility and scalability needed in the dynamic landscape of ML, adapting to changing requirements and seamlessly accommodating growth.

Cost Efficiency & Risk Mitigation: Efficient resource utilization and automated processes contribute to cost savings, while standardized deployments mitigate the risks associated with manual errors.

Innovation Acceleration: DevOps accelerates the pace of innovation by fostering collaboration, automating mundane tasks, and allowing teams to focus on creating cutting-edge ML solutions.

DevOps Best Practices for AI Deployment on AWS

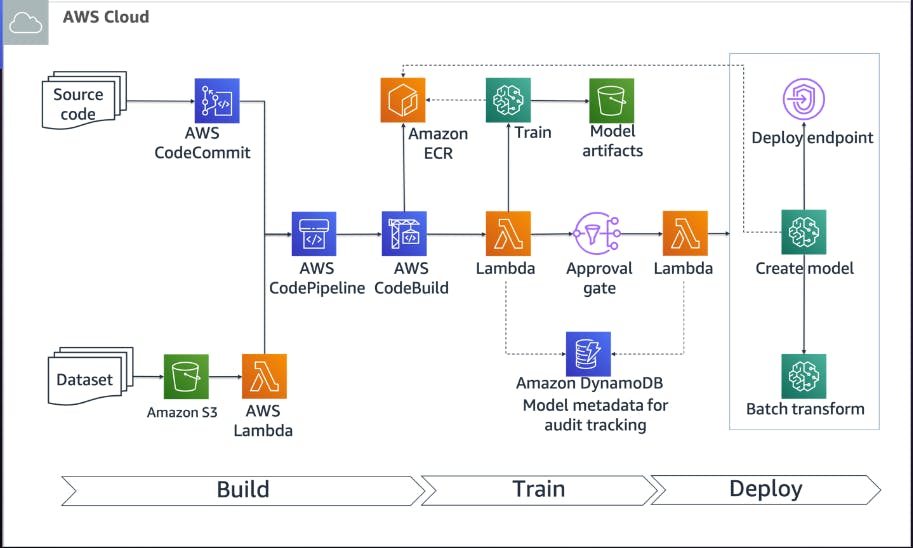

AWS CodePipeline: Bringing CI/CD to Life

AWS CodePipeline automates the end-to-end release process, ensuring a smooth flow from source code to production deployment. This CI/CD service becomes the backbone for continuous integration and delivery in ML workflows.
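As a rough illustration of what such a pipeline can look like, the sketch below builds a two-stage definition in Python (source from CodeCommit, then a CodeBuild project that trains and packages the model) in the shape that boto3's `create_pipeline` call accepts. The function names, stage names, role ARN, bucket, repository, and build project are placeholders assumed for illustration, not values from the session.

```python
from typing import Any, Dict


def build_pipeline_definition(
    name: str,
    role_arn: str,
    artifact_bucket: str,
    repository: str,
    branch: str,
    build_project: str,
) -> Dict[str, Any]:
    """Return a two-stage pipeline definition for CodePipeline's create_pipeline."""
    source_action = {
        "name": "Source",
        "actionTypeId": {
            "category": "Source",
            "owner": "AWS",
            "provider": "CodeCommit",
            "version": "1",
        },
        "configuration": {"RepositoryName": repository, "BranchName": branch},
        "outputArtifacts": [{"name": "SourceOutput"}],
    }
    build_action = {
        "name": "TrainAndPackage",
        "actionTypeId": {
            "category": "Build",
            "owner": "AWS",
            "provider": "CodeBuild",
            "version": "1",
        },
        # The CodeBuild project is assumed to run training and package the model.
        "configuration": {"ProjectName": build_project},
        "inputArtifacts": [{"name": "SourceOutput"}],
        "outputArtifacts": [{"name": "ModelArtifact"}],
    }
    return {
        "name": name,
        "roleArn": role_arn,
        "artifactStore": {"type": "S3", "location": artifact_bucket},
        "stages": [
            {"name": "Source", "actions": [source_action]},
            {"name": "TrainAndPackage", "actions": [build_action]},
        ],
    }


def create_pipeline(definition: Dict[str, Any]) -> Dict[str, Any]:
    """Submit the definition to AWS (needs boto3 and valid credentials)."""
    import boto3  # imported lazily so the definition can be built offline

    return boto3.client("codepipeline").create_pipeline(pipeline=definition)


# Placeholder values, for illustration only.
definition = build_pipeline_definition(
    name="ml-model-pipeline",
    role_arn="arn:aws:iam::123456789012:role/ExamplePipelineRole",
    artifact_bucket="example-pipeline-artifacts",
    repository="ml-model-repo",
    branch="main",
    build_project="ml-train-and-package",
)
print([stage["name"] for stage in definition["stages"]])
```

Keeping the definition as plain data means it can be reviewed and versioned like any other code before `create_pipeline` is ever called.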

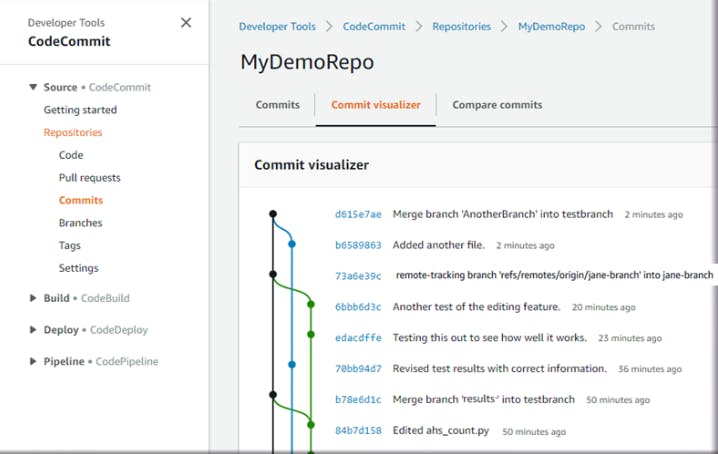

AWS CodeCommit: Version Control for AI

Version control is critical in ML projects. AWS CodeCommit provides a secure and scalable version control service, enabling teams to collaborate effectively while maintaining version history.

AWS CDK: Automating Infrastructure

The AWS Cloud Development Kit (CDK) allows developers to define cloud infrastructure as code. For ML projects, this translates to the ability to automate the creation and management of resources, ensuring consistency and reproducibility.
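To make the idea concrete, here is a minimal sketch in Python, assuming CDK v2 (the `aws-cdk-lib` package). It defines a versioned S3 bucket for model artifacts, parameterized per environment so that each environment is created from the same code. The `EnvConfig` class, the stack and bucket identifiers, and the retention values are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvConfig:
    """Per-environment settings (hypothetical names and values)."""

    env_name: str                   # e.g. "dev" or "prod"
    noncurrent_retention_days: int  # how long old artifact versions are kept


def stack_id(config: EnvConfig) -> str:
    """Derive a predictable stack identifier from the environment name."""
    return f"MlArtifacts-{config.env_name}"


def define_stack(app, config: EnvConfig):
    """Define a stack holding a versioned bucket for model artifacts."""
    # Imported lazily: requires the aws-cdk-lib package (CDK v2).
    from aws_cdk import Duration, RemovalPolicy, Stack
    from aws_cdk import aws_s3 as s3

    stack = Stack(app, stack_id(config))
    s3.Bucket(
        stack,
        "ModelArtifacts",
        versioned=True,                       # keep every uploaded model version
        removal_policy=RemovalPolicy.RETAIN,  # do not delete artifacts with the stack
        lifecycle_rules=[
            s3.LifecycleRule(
                noncurrent_version_expiration=Duration.days(
                    config.noncurrent_retention_days
                )
            )
        ],
    )
    return stack
```

In a CDK app's entry point, one would create an `aws_cdk.App()`, call `define_stack(app, EnvConfig("dev", 30))` for each environment, and finish with `app.synth()`.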

Amazon CloudWatch: Eyes on Your AI

Monitoring is a crucial aspect of AI deployment. Amazon CloudWatch collects metrics and logs from deployed models, helping teams detect issues early and optimize resource usage.
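As one possible example of early issue detection, the sketch below prepares the parameters for a CloudWatch alarm on the latency of a model served from a SageMaker endpoint. It assumes the model is hosted on SageMaker, which publishes `ModelLatency` (in microseconds) under the `AWS/SageMaker` namespace. The endpoint name, variant name, threshold, and evaluation settings are illustrative assumptions.

```python
from typing import Any, Dict


def latency_alarm_params(
    endpoint_name: str, variant_name: str, threshold_ms: float
) -> Dict[str, Any]:
    """Build keyword arguments for CloudWatch's put_metric_alarm."""
    return {
        "AlarmName": f"{endpoint_name}-high-model-latency",
        "Namespace": "AWS/SageMaker",
        "MetricName": "ModelLatency",
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "Statistic": "Average",
        "Period": 60,            # evaluate one-minute averages
        "EvaluationPeriods": 3,  # alarm after three consecutive breaches
        # ModelLatency is reported in microseconds, so convert from milliseconds.
        "Threshold": float(threshold_ms) * 1000.0,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
    }


def create_alarm(params: Dict[str, Any]) -> None:
    """Create the alarm in AWS (needs boto3 and valid credentials)."""
    import boto3  # imported lazily so the parameters can be inspected offline

    boto3.client("cloudwatch").put_metric_alarm(**params)


# Hypothetical endpoint and variant names.
params = latency_alarm_params("churn-endpoint", "AllTraffic", threshold_ms=500)
print(params["AlarmName"], params["Threshold"])
```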

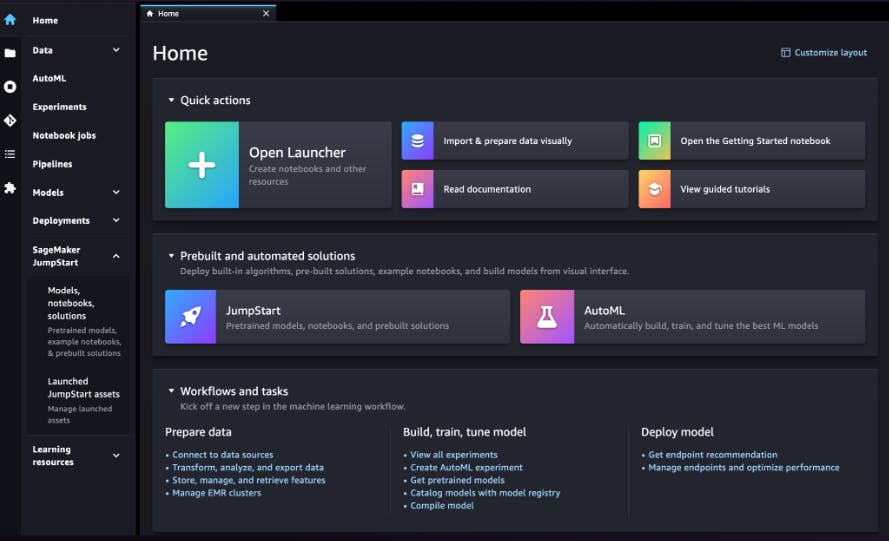

ML Best Practices for AI Deployment on AWS

Model Training

The session covered model training: the techniques, algorithms, and considerations for optimizing this foundational step in the ML lifecycle.

Model Deployment

Deployment is a critical phase. Here, the focus is on best practices for deploying ML models on AWS, ensuring scalability, reliability, and efficient use of resources.

Is Machine Learning Very Expensive?

It does not have to be. The session explored cost optimization strategies for ML projects that use AWS services to improve efficiency without compromising performance.

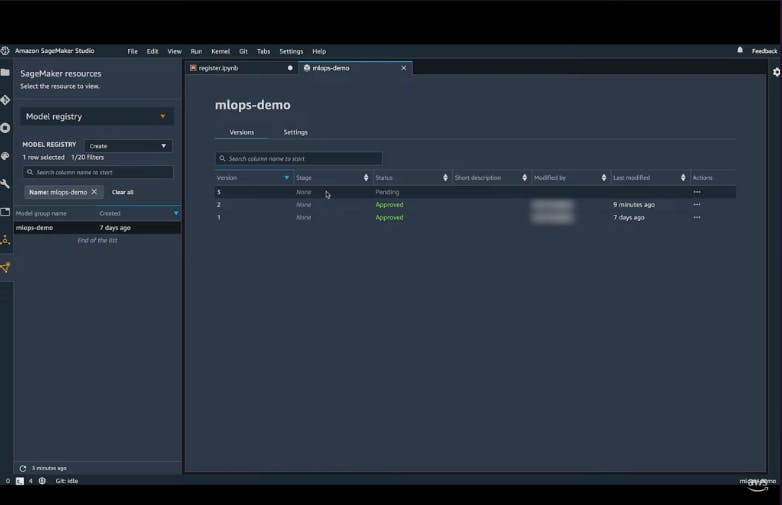

Model Registering and Tracking

Model registration and tracking maintain a clear record of model versions and changes, so a team can always tell which model is deployed and how it was produced.
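The session's specific tooling is not reproduced here. As a generic illustration of what a registry records, the following in-memory sketch tracks versions, the artifact location, the source commit, evaluation metrics, and an approval status. The class names, fields, and status values are hypothetical and are not the API of any AWS service.

```python
from dataclasses import dataclass, replace
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    artifact_uri: str          # where the trained model is stored
    git_commit: str            # code revision that produced the model
    metrics: Dict[str, float]  # evaluation results recorded at registration
    status: str = "PendingApproval"


class ModelRegistry:
    """Toy in-memory registry; a real one would persist its records."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}

    def register(
        self, name: str, artifact_uri: str, git_commit: str, metrics: Dict[str, float]
    ) -> ModelVersion:
        """Record a new version, numbered sequentially per model name."""
        versions = self._versions.setdefault(name, [])
        record = ModelVersion(
            name, len(versions) + 1, artifact_uri, git_commit, dict(metrics)
        )
        versions.append(record)
        return record

    def approve(self, name: str, version: int) -> ModelVersion:
        """Mark an existing version as approved for deployment."""
        versions = self._versions[name]
        approved = replace(versions[version - 1], status="Approved")
        versions[version - 1] = approved
        return approved

    def latest_approved(self, name: str) -> Optional[ModelVersion]:
        """Return the newest approved version, or None if there is none."""
        for record in reversed(self._versions.get(name, [])):
            if record.status == "Approved":
                return record
        return None


registry = ModelRegistry()
registry.register("churn", "s3://example-bucket/churn/1/model.tar.gz", "abc123", {"auc": 0.91})
registry.register("churn", "s3://example-bucket/churn/2/model.tar.gz", "def456", {"auc": 0.93})
registry.approve("churn", 1)
print(registry.latest_approved("churn").version)
```

Here version 2 exists but is not yet approved, so a deployment step that asks for the latest approved model still receives version 1.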

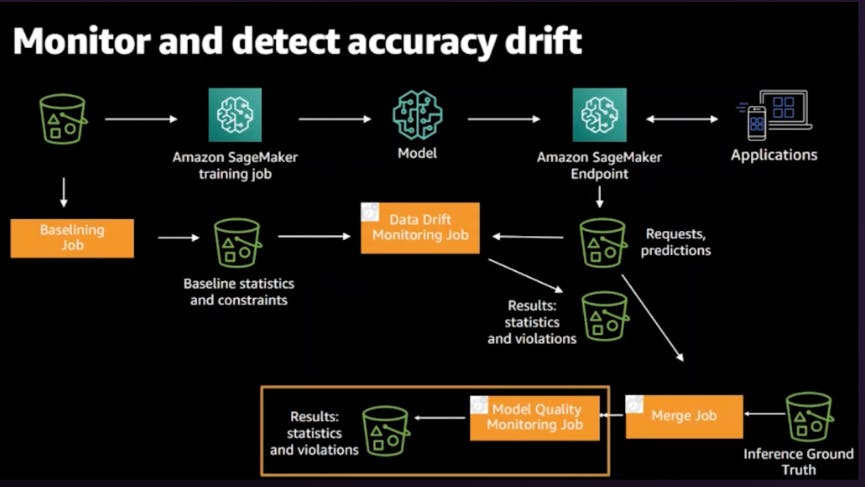

Model Monitoring

Monitoring is essential for ensuring the ongoing health and performance of ML models. The session explored AWS services that facilitate robust model monitoring.
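As a generic illustration of the kind of check a monitoring job might run (not a description of any particular AWS service), the sketch below computes the population stability index (PSI) between a training-time baseline and recent production values of one feature. The bin count, the smoothing constant, and the 0.2 alert threshold are assumptions; 0.2 is a commonly quoted rule of thumb rather than a fixed standard.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one feature; larger values mean more drift."""
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("baseline sample has no spread")
    width = (hi - lo) / bins

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            # Values outside the baseline range fall into the edge bins.
            counts[min(max(index, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins (assumed smoothing choice).
        return [max(count / len(values), 1e-6) for count in counts]

    expected_fracs = fractions(expected)
    actual_fracs = fractions(actual)
    return sum(
        (a - e) * math.log(a / e) for e, a in zip(expected_fracs, actual_fracs)
    )


baseline = [i / 100 for i in range(100)]       # spread evenly over [0, 0.99]
shifted = [0.5 + i / 200 for i in range(100)]  # concentrated in the upper half

DRIFT_THRESHOLD = 0.2  # rule-of-thumb value, assumed for illustration
print(population_stability_index(baseline, baseline) > DRIFT_THRESHOLD)
print(population_stability_index(baseline, shifted) > DRIFT_THRESHOLD)
```

A result above the threshold could be published as a custom metric and alarmed on, tying drift detection back into the same alerting path as operational metrics.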

Key Takeaways and Q&A

Workflows for Synchronizing the Codebase are Super Important

Well-synchronized codebase workflows matter as much in ML as in DevOps: they keep collaboration and integration smooth across the team.

IaC Helps with Reproducibility

Infrastructure as Code (IaC) is a key enabler of reproducibility in ML projects, ensuring that environments can be recreated reliably.

Model Building is a Small Part of Machine Learning

Model building is just one component of the full ML lifecycle, which also includes deployment, registration, tracking, and monitoring.

Model Registry, Model Tracking, Model Monitoring are Super Important

Effective ML operations depend on all three: a model registry, model tracking, and model monitoring.

Conclusion

In conclusion, DevOps and Machine Learning are closely linked. The best practices on AWS outlined above give teams a practical way to handle the complexities of AI deployment.

Note: This material was recently presented at AWS Community Day Vadodara, where it prompted a lively exchange with the community.